This is a note on denotational semantics, undergirded by an extended exploration of domain theory, which provides the theoretical underpinning for the former.

References

This explanation incorporates notes from the following sources:

- Winskel 5: The denotational semantics of Imp

- Winskel 6, 7, 8: Axiomatic semantics and domain theory, specifically §8

- Winskel’s Lecture Notes on Denotational Semantics for Part II of the Computer Science Tripos

- Models of Typed Calculus, from Mitchell’s Foundations for Programming Languages

- this answer on PL StackExchange

- Necula, CS 263 - Introduction to Denotational Semantics

For a basic definition of and explainer of denotational semantics, see Winskel 5: The denotational semantics of Imp and this other StackExchange answer. These notes onwards go over dealing with recursive constructs in denotational semantics with domain theory and partial orders.

Dealing with recursive constructs

In A denotational semantics for IMP, we defined the denotation for while in terms of a recursive equation, that we resolved by finding the fixed point of operators on sets—in this case, the fixed point of repeated set unions. In particular, we defined an operation

The proof for showing that such a fixed point exists at all involves showing that every time we call

In this case, we could get away of seeing the semantics of while as the fixed point of doing a bunch of set unions. However, this will not always be possible:

In Section 5.4 we shall introduce a general theory of fixed points, which makes sense when the objects defined recursively are not sets ordered by inclusion.

More generally, we’re not just interested in sets. What we’re actually interested in is some notion of some function having more or less information than another function. And actually since the denotation of some syntax might be any mathematical object, not just a function, we’re interested in the general notion of a mathematical object having more or less information than another object.

This general notion of information-having is a partial ordering, which is defined and dealt with in a branch of abstract math called domain theory. Domain theory gives us the language to generalize the idea of “using fixpoints to find sets that denote recursive constructs” beyond just sets, but to any mathematical object that might denote some construct in a language of our choosing. The lecture notes summarize the key insight behind this perspective:

The key idea is to consider a partial order between the mathematical objects used as denotations—this partial order expresses the fact that one object is approximated by, or carries more information than, or is more well-defined than another one below it in the ordering. Then the minimal solution of a fixpoint equation can be constructed as the limit of an increasing chain of approximations to the solution. (Lecture notes, 4)

Basically, we create a partial ordering between functions that provides mathematical structure for this intuition of “having more information.” Then, we create a chain of partial orderings (analogous to the

Partial orders in the abstract

First, let’s define partial orders in the abstract. This won’t have much meaning for now, but trust me, we’ll see how these operations become useful and can become imbued with meaning in the next sections. Like monads!

A partial order

- Reflexivity:

- Transitivity:

- Anti-symmetry:

Domain theory in a worked example

Let’s walk through how we might find the denotation for a while loop that calculates the factorial of a number n:

x = n

y = 1

while x > 0:

y = x * y

x = x - 1

In this case, our state

This function is analogous to

Basically,

This is because we can’t call

And so on. In general, we have:

Bringing back partial orderings

If we’re looking at these functions, we can intuit that

In other words,

Let’s do some more abstract definitions. Given a poset

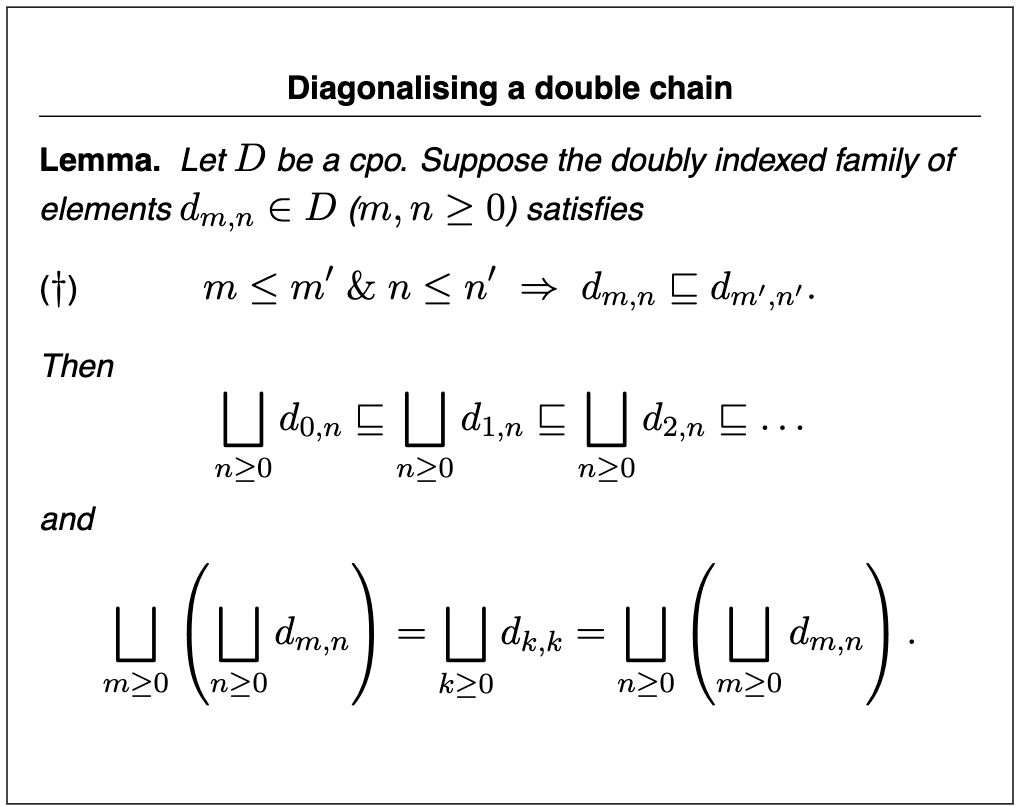

A countable, increasing chain in a poset

These elements do not have to necessarily be distinct! It could be one element repeating forever if we wanted to.

An upper bound for the chain is any

Our definition of

A complete partial order (i.e., cpo) is a poset where every chain has a lub. For instance, the poset

A domain is a cpo with a bottom element. i.e., it’s not just that every chain has a lub, but the whole set has an element that is “smaller than or equal to” all others. Hence, domain theory!

Now, let’s start talking about functions operating on cpos, like our function

- Monotonicity: the function preserves partial ordering.

- Continuity: for all chains in

, the lub of function calls of elements in a chain is the same as calling the function on the lub.

- Strictness: if

and have least elements, then must return when passed :

Example

Let’s consider the domain of natural numbers with an additional “top” element

. If we define a function , it’s monotonic ( , and ) and strict, but isn’t continuous. Consider the chain consisting of all natural numbers (but not itself) The lub of the chain of all natural numbers is straightforwardly , so . However, when evaluating , will always return (infinity is weird). Thus, we have , and .

Tarski’s fixed point theorem

A fixed point of a function

The pre-fixed point of a function

(by definition, set ) (since the fixed point is on the left side)

It’s important to note that the least pre-fixed point its itself a fixed point:

Tarski’s fixed point theorem states that a continuous function

Additionally, since

This theorem allows us to give denotational semantics for programs with recursive features! We can prove that a recursive function

Go team!

Domain theory, and operations therein

Now, we take a more abstract lens, focusing on domain theory. Let’s start with denotational semantics’ connection to domains:

In denotational semantics a programming construct (like a command, or an expression) is given a meaning by assigning to it an element in a “domain” of possible meanings. The programming construct is said to denote the element and the element to be a denotation of the construct. For example, commands in IMP are denoted by elements from the “domain” of partial functions, while numerals in IMP can denote elements of

. (Winskel 119)

And why do we need domain theory and, in particular, partial orders?

As the denotational semantics of IMP in Chapter 5 makes clear it can sometimes be necessary for “domains” to carry enough structure that they enable the solution of recursive equations. (Winskel 119)

Winskel §8 continues with redefinitions of cpos, monotonicity, and continuity. When we want to use domains for expressing (recursive) programming constructs, we want cpos and monotonic, continuous functions on those cpos, since recursive constructs can only be expressed in terms of cpos.

Isomorphisms

Before we get going, we quickly formally define an isomorphism. Given two cpos

and , a function is an isomorphism iff is a 1-1 correspondence such that This is monotonicity across domains. Basically, if an isomorphism exists between

and , they are isomorphic and are basically “equivalent” down to renaming.

Discrete domains

A discrete cpo is a set where the partial ordering relation is the identity. A chain of partial orderings is just the same element repeated over and over again. Basic values—bools, ints—form discrete cpos. Any function from a discrete cpo to another cpo is continuous.

Products

Let

which consists of

The partial ordering operation is done element-wise:

Lubs of chains are also calculated componentwise. The product of cpos is a cpo.

The projection function

We can tuple functions too. Given

This function is monotonic.

We can define the product of functions

Properties on general functions on products

- If

is a function from a cpo to a product of cpos, it’s monotonic/continuous iff is monotonic/continuous for all . - If

is a function from a product of cpos to a cpo, it’s monotonic/continuous iff it’s monotonic/continuous in each argument separately (i.e., with respect to each argument, while keeping others constant).

- This basically let’s us do commutativity:

Functions

The set of continuous functions of type

Application

Flat domains

To model ground types like

Thus,

Sums

Finally, we can form disjoint unions of cpo’s. Let

and partial order

This relies on injection functions

We can combine

TODO

There are lots of proofs that I’m eliding here, particularly proofs of function continuity and monotonicity. Be wary.