See also

The Stanford CS 164 handout and lecture slides on semantic analysis provides a broad overview of what semantic analysis is. This top-level summary draws from this.

Semantic analysis is the process of ensuring that the program has a well-defined meaning. This program might be syntactically correct, but it is a legal program? Semantic analysis encompasses a number of different steps:

- Checking if a variable is declared before use in right scope.

- Type-checking. See TAPL 15, 18: Subtyping & OOP, TAPL 20, 22, 23, 24: Recursive, universal, and existential types.

- Disambiguate variables (symbol table mapping vars to unique ids, de Brujin indices, etc.).

- Checking for

public/privateaccess.

Some forms of semantic analysis can be performed during parsing; attribute grammars. and syntax-directed translation in general, allow us to interleave program analysis into parsing. Most forms of semantic analysis operate on the AST of our programs.

Hindley-Milner type checking & inference

See also

This section builds on the detailed treatment and explanation of constraint-based type inference in

(i.e., no polymorphism) from TAPL §22. My Prelim review notes of the section are a helpful summary. This specific section takes from Tufts CS 105 lecture slides on Hindley-Milner type inference: constraint solving and instantiating and generalizing polymorphic types

Other connections

Keep in mind that the same equality constraint-based setup for doing type checking and inference here—i.e., equality solving—is also used in a bunch of other different domains (at least according to Federico):

- It’s part of how SAT and SMT solving works!

- It has some to do with e-graphs and equality saturation (see egglog), which has interactions between unification and union-find. In particular, an efficient way to store equivalences between sets of types, as we need to do here, is to use a union-find data structure, the same one that powers e-graphs! See here for more.

The commonality is that we set up some system of (in)equalities that we want to solve, and we want to perform some kind of unification (in the general sense, not specifically unification-the-type-inference-algorithm) to figure out what’s equal to what. In this case, we have equalities between types, which we solve with Hindley & Milner’s unification algorithm.

At a high-level, real-world type checking and inference algorithms involve two steps:

- Constraint generation: building equality constraints between different types.

- In inference, we might have type variables standing in for unknown types.

- In

λx -> x + 1,x : a1and1 : Nat. - Since

(+) : Num a => a -> a -> a, we require the type equalitiesNat ~ aanda1 ~ a.

- Constraint solving: figuring out a valid substitution for our type variables.

a1 = Nat

As described in TAPL 20, 22, 23, 24: Recursive, universal, and existential types, we can have polymorphic types, where we universally quantify over some type variable. This is generics! We distinguish between monotypes, which don’t have quantification, and polytypes (or type schemes), which do have quantification.

In a Hindley-Milner type system, we have two rules for where polymorphic types can appear, basically amounting to let-polymorphism:

- Expressions must have monotypes.

- If a polymorphic term is used in an expression, we must instantiate it to make it monomorphic.

- Things that are named (not including function arguments) must have polytypes. e.g., let bindings, function definitions, etc.

- If we see a let-binding, generalize the inferred type to make it polymorphic.

- In

let n = 3 in ...,(i.e., type scheme with 0 vars)

Constraint solving

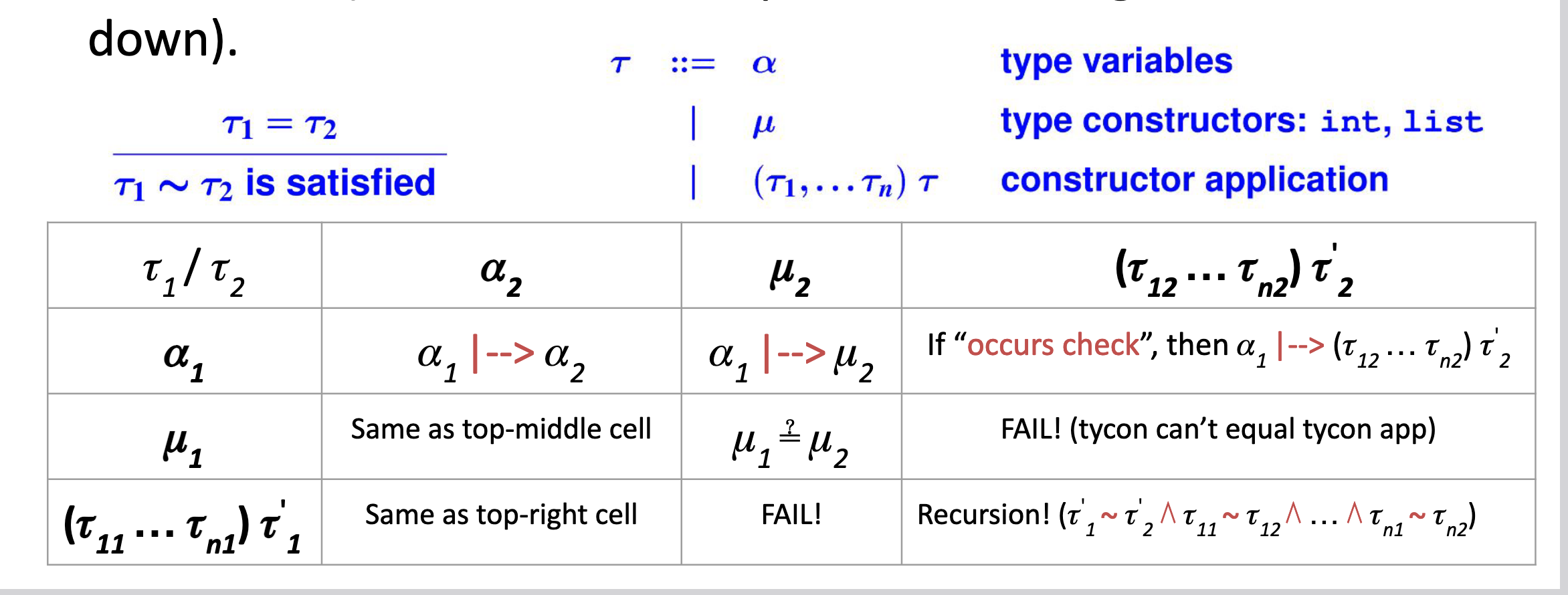

Constraint solving is basically the same as is described in TAPL §22. The main difference here is that in a Hindley-Milner type system, the syntax for types is slightly different. In particular, we separate monotypes and polytypes, and monotypes can also be type constructors.

We unify as follows (the lectures further divide

Instantiation and generalization

Because we have polytypes, we need to be able to instantiate them when used in expression position, and generalize expressions when they’re name-bound, introducing

When we see a polytyped term in an expression, we need to instantiate the polytype. However, we don’t actually need to add any constraints. Instead, we just generate fresh type variables to fill in all of our universally quantified holes. Later type-checking will then constrain these type variables with constraints.

Now, what if we want to let bind a monotyped expression

- Infer a type for

: . - This type may have some concrete types and some type variables.

- Replace type variables with quantification holes.

This is mentioned in TAPL §22! Steps 1-4 are generalization, step 5 is instantiation:

Efficiency improvements

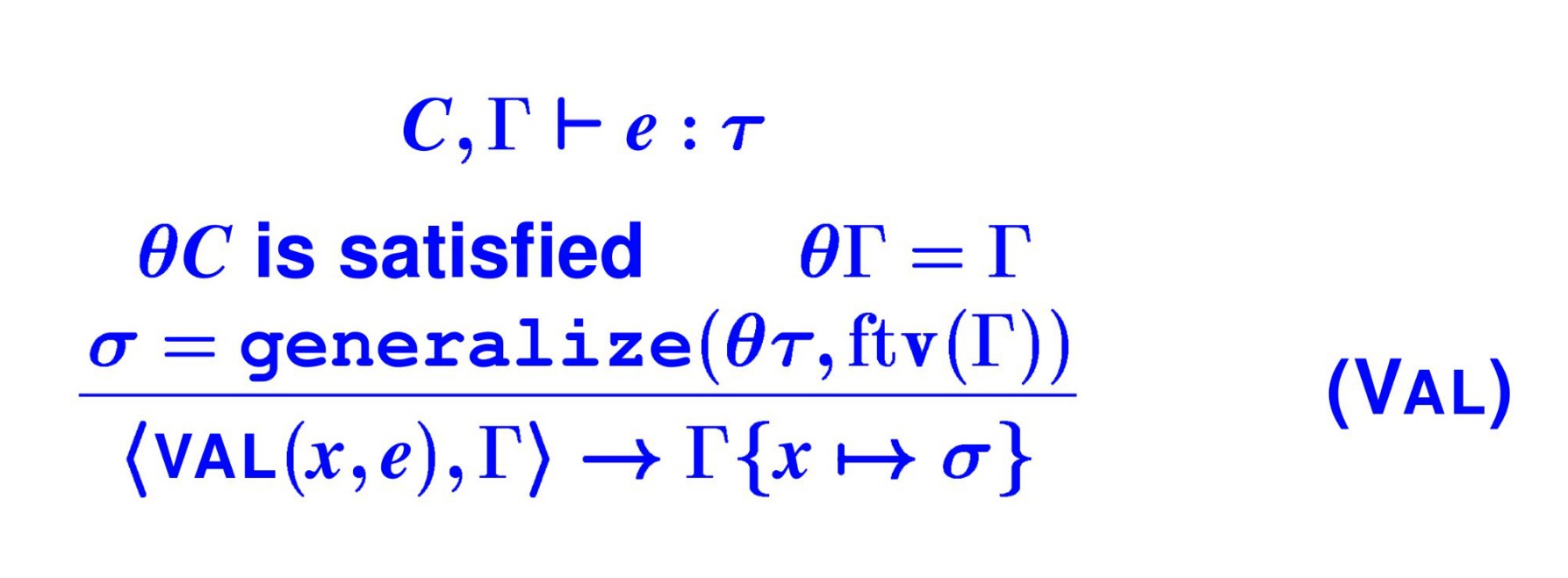

Another problem is that the let-bound variable definition is now type-checked on every occurrence of the variable—since we’re substituting it directly into the expression—which can cause exponential blow-up since the right-hand side can itself contain let bindings. To avoid, this, languages use a different reformulation of these rules that’s more efficient:

- Use constraint typing to find type

and constraint set for . - Use unification to find the principal type

of . - Generalize the remaining type variables in

. is no longer a type, but a type scheme, representing the structure of a type with respect to universally quantified holes.

- Extend the context to record this type scheme for the bound variable

, and type-check . - Whenever

occurs in , generate fresh type variables and use them to instantiate the type scheme for , yielding , which becomes the type of in that use. This is mostly linear, but can still exhibit exponential blowup if

lets are nested in the right-hand sides of otherlets.Link to originalSee also

For more on doing constraint-based type checking and inference with generics-style polymorphism, see Instantiation and generalization.

The slides write this out like so: